This Week in AI was WILD: Grok 2, Claude, SearchGPT, AgentQ and AI Scientist You Can’t Afford to Miss

2024-08-18

If you thought last week was intense, wait until you read what happened this week. It was WILD!

There were some seriously game-changing tools and research that you have to know about, as they completely flipped the script.

Let me take you on a ride through the most exciting developments this week in AI:

- Claude’s Prompt Caching

- Grok 2

- SearchGPT

- Strawberries

- AgentQ

- AI Scientist

- Google’s Gemini Event

Let’s GOOOOOO!


Anthropic’s Claude Gets Prompt Caching

Anthropic rolled out prompt caching for Claude, and if you’re anything like me, this is going to make your life a whole lot easier.

Here’s how it works: let’s say you’re running a conversational agent.

With prompt caching, you can keep a summary of your entire conversation history in the cache.

Basically, the API stores all that context you usually send with every single API call (the long instructions, background knowledge, examples) so Claude doesn’t have to reprocess it from scratch every time.

Or maybe you’re working on a coding assistant.

You can cache a summarized version of your codebase and see a massive boost in how quickly and accurately Claude can help you debug or autocomplete.

The same goes for large document processing, detailed instruction sets, and pretty much any scenario where you’re reusing the same chunk of context over multiple requests.

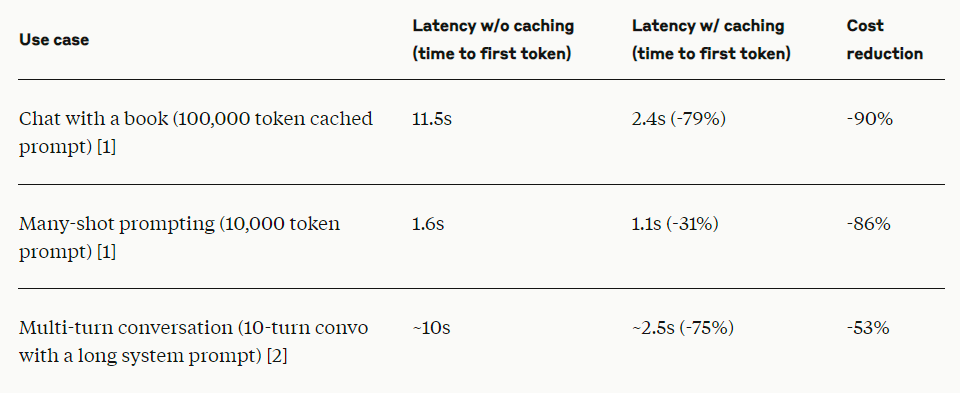

It helps latency a lot.

That’s not just a minor tweak; that’s freaking good.

Oh, and here’s a pro tip: the cache has a 5-minute lifetime, but it refreshes every time you use it.

You can also set up to four cache breakpoints.

This is super handy if you’ve got a massive system prompt or if you’re in the middle of a complex, multi-turn conversation.

It means Claude can quickly pull up the exact context it needs without starting from scratch.
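To make the breakpoint idea concrete, here is a minimal sketch of what a request body with one cache breakpoint might look like. It only builds the payload and does not call the API. The `cache_control` field with type `ephemeral` follows Anthropic’s public beta documentation as I understand it; the helper names, the model ID, and the `max_tokens` value are my own illustrative choices, so check the current docs before relying on any of it.

```python
MAX_CACHE_BREAKPOINTS = 4  # limit mentioned in the article


def build_cached_request(instructions, reference_text, user_message,
                         model="claude-3-5-sonnet-20240620"):
    """Build a Messages-style request body with one cache breakpoint.

    Hypothetical helper: everything up to and including the block that
    carries `cache_control` is the prefix that gets cached.
    """
    return {
        "model": model,
        "max_tokens": 1024,
        "system": [
            {"type": "text", "text": instructions},
            {
                "type": "text",
                "text": reference_text,
                # Breakpoint: cache the prompt prefix ending here.
                "cache_control": {"type": "ephemeral"},
            },
        ],
        "messages": [{"role": "user", "content": user_message}],
    }


def count_breakpoints(request):
    """Count blocks marked for caching (system and message blocks only)."""
    blocks = list(request.get("system", []))
    for message in request["messages"]:
        if isinstance(message["content"], list):
            blocks.extend(message["content"])
    return sum(1 for block in blocks if "cache_control" in block)


request = build_cached_request(
    instructions="You are a helpful coding assistant.",
    reference_text="<long codebase summary goes here>",
    user_message="Why does the login test fail?",
)
assert count_breakpoints(request) <= MAX_CACHE_BREAKPOINTS
```

The long, stable part of the prompt goes before the breakpoint and the part that changes on every call (the user message) goes after it, so follow-up requests with the same prefix can hit the cache.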

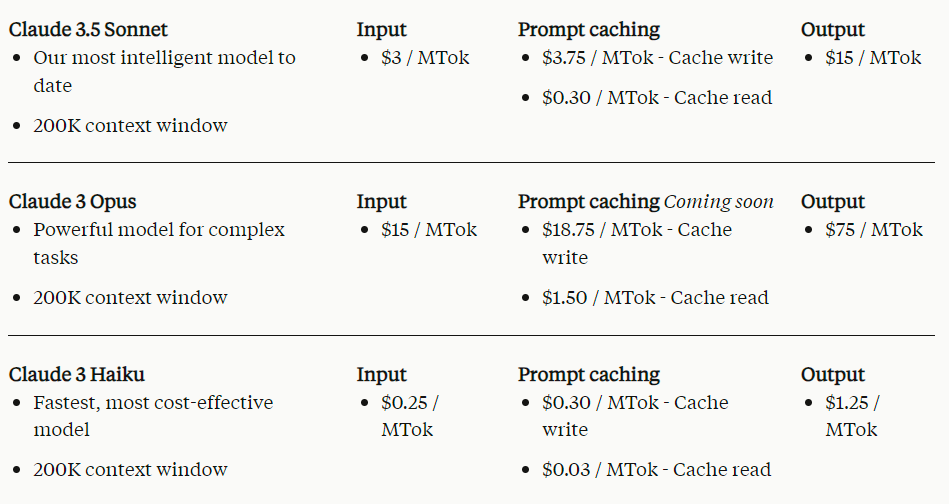

Prompt caching is live now in public beta for Claude 3.5 Sonnet and Claude 3 Haiku.

And if you’re eyeing Claude 3 Opus, hang tight — it’s coming soon.

Now, let’s talk pricing — because, honestly, that’s where it gets even more interesting.

Caching is super affordable.

Writing to the cache costs a bit more upfront (about 25% more than the base input token price), but once it’s in there, using that cached content is dirt cheap, costing just 10% of what you’d normally pay.

That’s a serious saving when you’re working with a ton of data.

For example, if you’re using Claude 3.5 Sonnet, caching your prompt costs $3.75 per MTok to write and only $0.30 per MTok to read.

Compare that to processing everything fresh every time, and you’re saving big bucks — and big time.
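Here’s a quick back-of-the-envelope sketch of that saving. The write and read prices come from the numbers above; the $3.00 base input price is implied by them (3.75 is 25% above it, 0.30 is 10% of it). The function name and the example workload are my own assumptions, and the sketch assumes every follow-up call lands within the cache lifetime and ignores output tokens and the uncached part of each prompt.

```python
BASE_INPUT = 3.00    # $ per MTok, implied by the article's percentages
CACHE_WRITE = 3.75   # $ per MTok, from the article
CACHE_READ = 0.30    # $ per MTok, from the article


def prefix_cost(prefix_tokens, calls, cached):
    """Cost in dollars of sending the same prefix `calls` times."""
    if calls < 1:
        return 0.0
    mtok = prefix_tokens / 1_000_000
    if not cached:
        return calls * mtok * BASE_INPUT
    # First call writes the cache; every later call reads from it.
    return mtok * CACHE_WRITE + (calls - 1) * mtok * CACHE_READ


# Hypothetical workload: a 100,000-token prefix reused across 10 calls.
uncached = prefix_cost(100_000, calls=10, cached=False)
cached = prefix_cost(100_000, calls=10, cached=True)
print(f"uncached: ${uncached:.3f}, cached: ${cached:.3f}")
```

For this made-up workload, caching comes out to roughly $0.65 against $3.00 without it. Note that a single call is actually more expensive with caching (you pay the write premium), so it only pays off from the second reuse onward.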

Notion is already using prompt caching for their Notion AI. They’ve managed to optimize their operations so much that they’re delivering faster, cheaper, and overall better experiences to their users. And they’re doing it while keeping the high quality we all expect from Notion AI.

Simon Last, the co-founder of Notion, basically summed it up:

We’re excited to use prompt caching to make Notion AI faster and cheaper, all while maintaining state-of-the-art quality.

If that doesn’t make you want to dive into this feature, I don’t know what will.

Grok 2 Beta: The Latest from Elon’s AI Playground

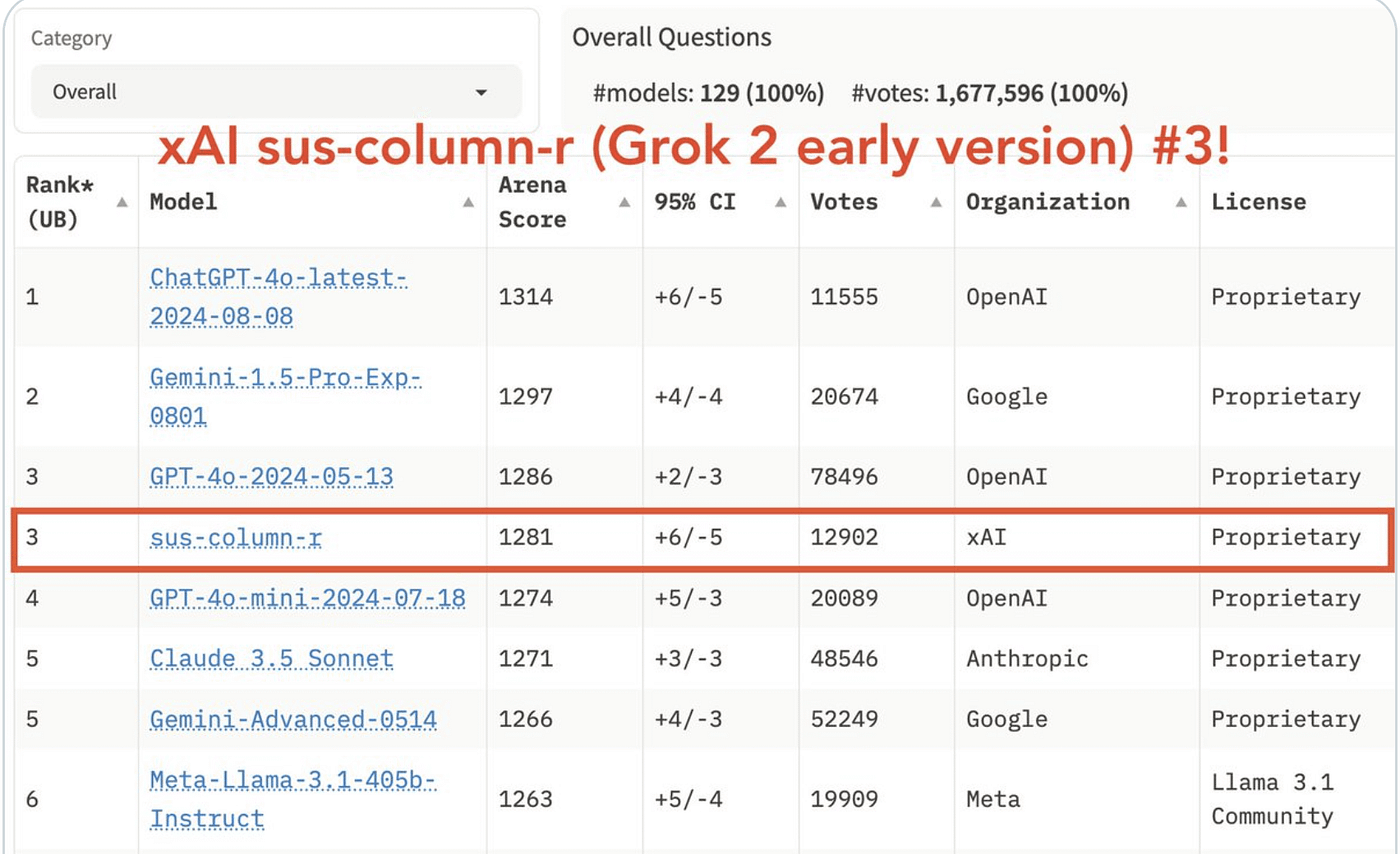

The mysterious “sus-column-r” model everyone was speculating about on lmsys.org was Grok 2 all along.

Elon’s AI venture, x.ai, is behind this beast, and it’s something to get excited about.

Now, why is everyone buzzing about this?

Well, Grok 2 isn’t just good — it’s smashing it on the logic and reasoning front.

It’s even outperforming big names like Claude 3.5 Sonnet and GPT-4-Turbo on the LMSYS leaderboard.

Later this month, both Grok 2 and its smaller sibling, Grok 2 Mini, will be available through an enterprise API.

Here are Grok 2’s main highlights:

- Grok 2 is outperforming both Claude 3.5 Sonnet and GPT-4-Turbo on the LMSYS leaderboard.

- Both Grok 2 and Grok 2 Mini will be available through an enterprise API later this month.

- Grok 2 showcases state-of-the-art performance in visual math reasoning and document-based question answering.

- The impressive text-to-image features are powered by Flux, not Grok 2 directly.

As for competing with others, Grok 2 is shining a harsh light on Google’s AI struggles.

For all the amazing DeepMind demos we’ve seen over the past decade, Google seems to be slipping.

It’s baffling, but also kind of makes sense.

The talent behind Google’s most groundbreaking work has either been grabbed by other companies offering bigger paychecks or they’ve jumped ship to start their own ventures backed by tons of VC cash.

Why stick around at Google when you can make way more money elsewhere?

It’s a trend that’s been happening for a while now — just look at the original authors of the Google Transformer paper. Most of them have moved on to greener (and more lucrative) pastures.

Oh, and one last thing — Grok might need a rebrand. I mean, the name started out as a bit of a joke, tied to roasting people and being more of a meme than a serious model.

But now that it’s dominating, maybe it’s time to give it a name that reflects just how powerful it really is.

So yeah, Grok 2 is definitely one to watch. It’s shaking things up, and I’m here for it.

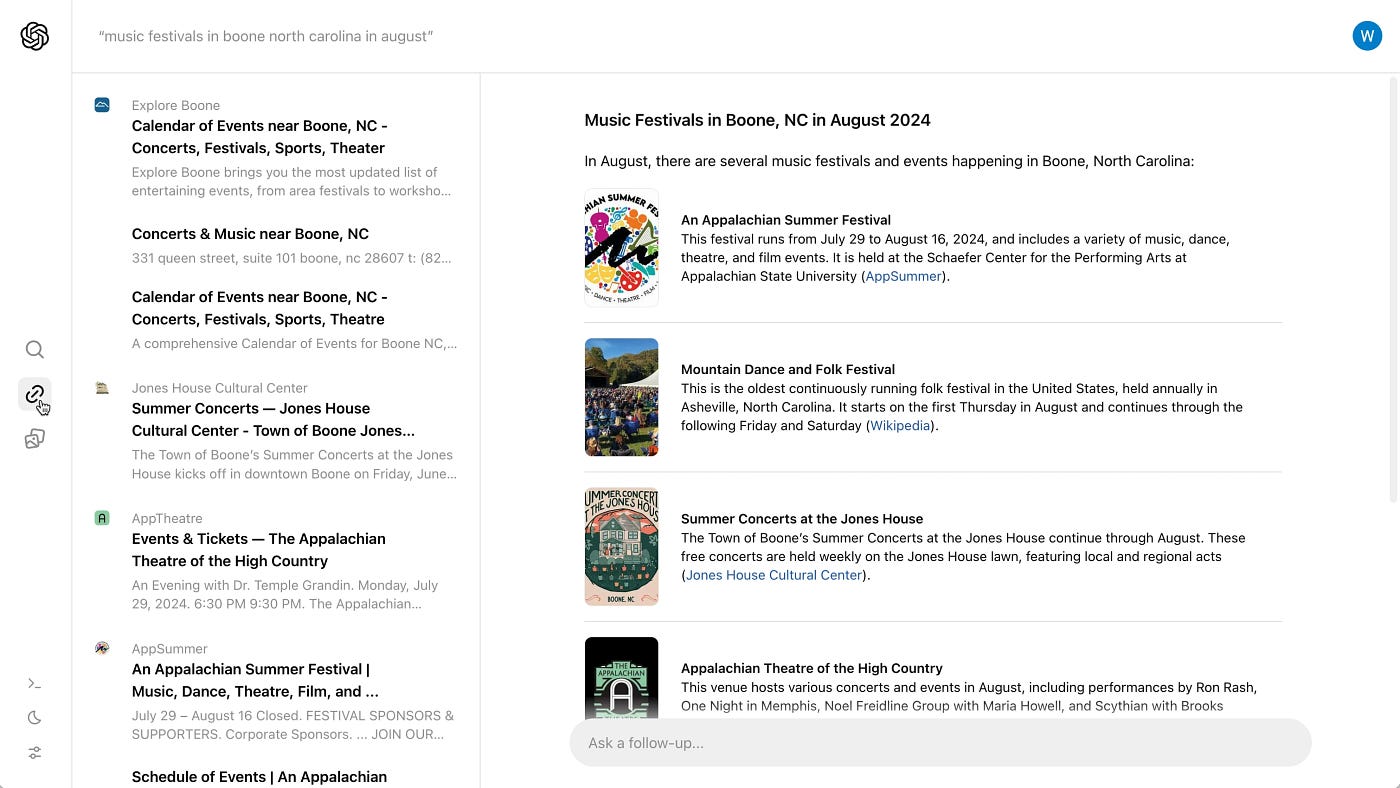

SearchGPT: The Google Killer?

Let me tell you about something that’s seriously shaking things up — SearchGPT.

Together with Perplexity, it is changing what people expect from search.

Imagine a search engine that doesn’t throw ads at your face or make you dig through ten blue links for an answer.

These are not just other search engines; they’re a whole new way of navigating the web, and they’re giving Google a real run for its money.

Seriously, if you’re still slogging through ads and endless blue links, you’re missing out. If I’m the product of a search engine, I don’t want ads on top of that. It’s that simple. I just want relevant and personalized results.

What’s worse, finding what you need on the web can be a pain sometimes.

You type in a query, click through a bunch of irrelevant results, and still don’t get what you’re looking for.

But it’s all changing real fast.

Early-access users report that SearchGPT is fast, accurate, and gives you exactly what you want. It seems like OpenAI has really nailed it by blending conversational AI with real-time web information.

You ask a question, and SearchGPT gives you a clear, concise answer, backed up with relevant sources and even the latest media. No more guessing, no more sifting through outdated links.

Even the bigwigs are taking notice. Nicholas Thompson, the CEO of The Atlantic, said it best:

AI search is going to become one of the key ways that people navigate the internet, and it’s crucial, in these early days, that the technology is built in a way that values, respects, and protects journalism and publishers. We look forward to partnering with OpenAI in the process, and creating a new way for readers to discover The Atlantic.

Google’s dominance? It’s on shaky ground, my friend.

Mr. Strawberry Takes Over AI Twitter

OK, let’s get lighter for a minute and talk about the wild ride of Mr. Strawberry.

This dude blew up on Twitter, going from just a few thousand followers to over 30k in what felt like the blink of an eye.

All because he was hyping up something called “Strawberry,” which he hinted might be Q-Star or even GPT-5 from OpenAI.

But here’s the kicker: the guy was full of it.

He drops this cryptic tweet, Sam Altman gives it a like, and suddenly he’s acting like he’s got the inside scoop on the next big thing in AI.

But surprise — it was all a joke. He confessed later on Twitter Spaces that the whole thing was just “performance art.”

As annoying as it was to watch, this kind of thing is becoming all too common.

AI hype is everywhere, and it’s like a magnet for grifters trying to build a following or make a quick buck.

The worst part? There are always people ready to buy into it and spread the nonsense even further.

In the end, this probably won’t change much.

Question to you: How do you deal with the hype when you are trying to follow what’s really going on?

If we’re lucky, folks might be a little more skeptical for a while, but they’ll move on to the next hype master soon enough.

So yeah, Mr. Strawberry was all smoke and mirrors, but hey, the memes were good while they lasted.

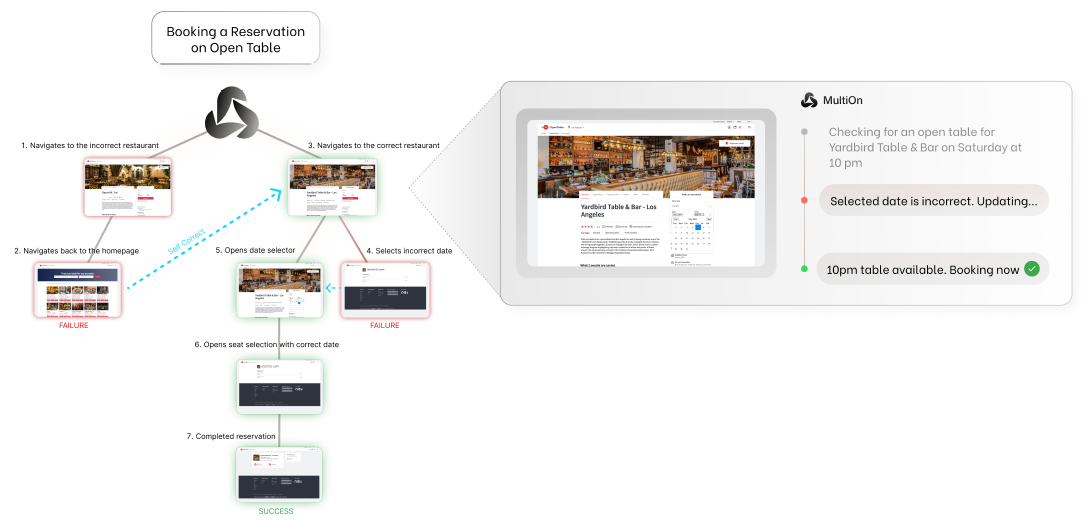

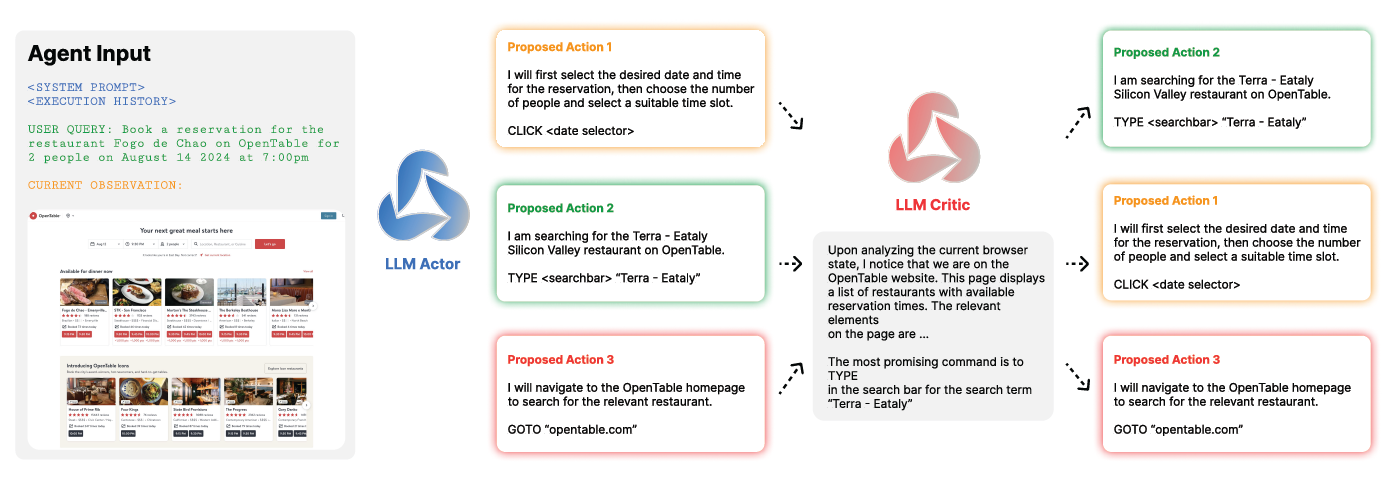

AgentQ: The Next-Gen AI Agent

When it comes to handling complex, multi-step tasks like navigating a website, agents often trip over themselves.

The reason?

They’re just not built for dynamic, real-world interactions.

They’ve been trained on static datasets, which doesn’t exactly prepare them for the chaotic mess that is the internet.

They work okay-ish for straightforward tasks, but when you throw something complex at them — like booking a table through a clunky website — they start to struggle.

The problem is, they tend to make small mistakes that snowball into bigger issues, leading to less-than-stellar results.

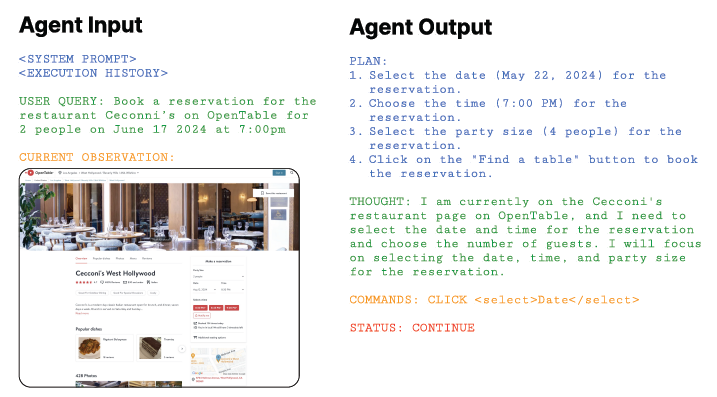

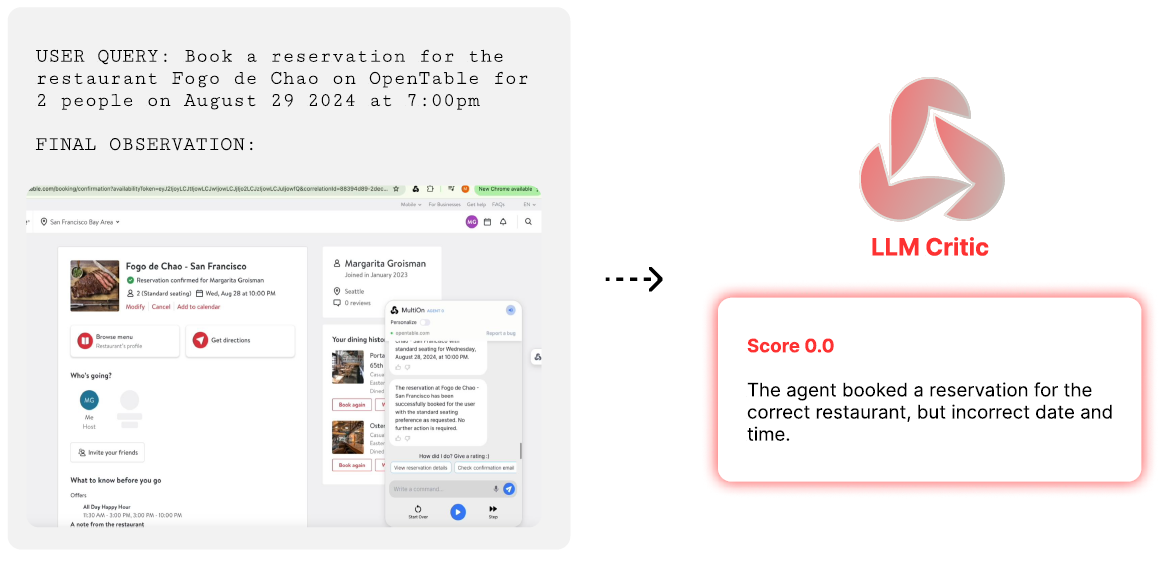

But AgentQ is different, the authors say.

It can actually navigate the web, make decisions, and even correct itself when things go off the rails.

Under the hood, it combines guided Monte Carlo Tree Search (MCTS), AI self-critique, and Direct Preference Optimization (DPO):

- Guided Search with MCTS: AgentQ uses MCTS to explore different actions and webpages, smartly balancing between trying new things and sticking to what works.

- AI Self-Critique: Every step of the way, AgentQ is checking itself. This feedback loop is crucial for keeping the agent on track during long, complicated processes.

- Direct Preference Optimization (DPO): After gathering data, AgentQ fine-tunes itself using DPO. This way, AgentQ gets better at making decisions, even in tricky situations.
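That “trying new things vs. sticking to what works” balance is the heart of MCTS. Here is a minimal sketch of the textbook UCB1 selection rule to show the idea. To be clear, this is the standard formula, not AgentQ’s actual implementation (the authors describe a guided variant that also uses AI feedback), and the action names and numbers are made up.

```python
import math


def ucb1_score(total_value, visits, parent_visits, c=1.4):
    """Average reward so far plus a bonus for rarely tried actions."""
    if visits == 0:
        return math.inf  # always try an unvisited action first
    exploit = total_value / visits
    explore = c * math.sqrt(math.log(parent_visits) / visits)
    return exploit + explore


def select_action(children, c=1.4):
    """Pick the action with the best score.

    children maps an action name to (total_value, visits).
    """
    parent_visits = sum(visits for _, visits in children.values())
    return max(
        children,
        key=lambda action: ucb1_score(*children[action], parent_visits, c),
    )


# Hypothetical statistics for three actions on a booking page.
stats = {
    "click_search": (8.0, 10),  # works well, tried often
    "open_menu": (1.0, 2),      # mediocre so far, barely tried
}
print(select_action(stats))         # exploration bonus favors open_menu
print(select_action(stats, c=0.0))  # pure exploitation favors click_search
```

The constant `c` controls how adventurous the search is: with `c=0` the agent only repeats what has worked, while a larger `c` pushes it toward actions it has barely tried.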

Now, let’s get to the good stuff — real-world results.

In a booking experiment on OpenTable, AgentQ took the LLaMa-3 model from a pretty sad 18.6% success rate to a jaw-dropping 81.7% after just one day of learning on its own.

And with online search enabled, it hit an insane 95.4%.

That first jump alone, from 18.6% to 81.7%, is a 340% relative improvement!
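If you want to sanity-check the 340% figure, relative improvement is just (after - before) / before. A tiny sketch using the success rates quoted above (the helper name is mine):

```python
def relative_improvement(before, after):
    """Percentage increase of `after` over `before`."""
    return (after - before) / before * 100


base, learned, with_search = 18.6, 81.7, 95.4  # success rates in %

print(f"{relative_improvement(base, learned):.1f}%")
print(f"{relative_improvement(base, with_search):.1f}%")
```

The jump from 18.6% to 81.7% works out to about 339%, which matches the rounded 340% claim. Measured against the 95.4% result with online search, the relative improvement is even larger, a bit over 400%.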

We’re talking about a massive leap in what these agents can do.

This tech is coming to developers and consumers later this year.

So whether you’re building the next big thing or just want an AI that can actually get stuff done online, AgentQ is something to get excited about.

If you have tried agents, what’s your experience so far?

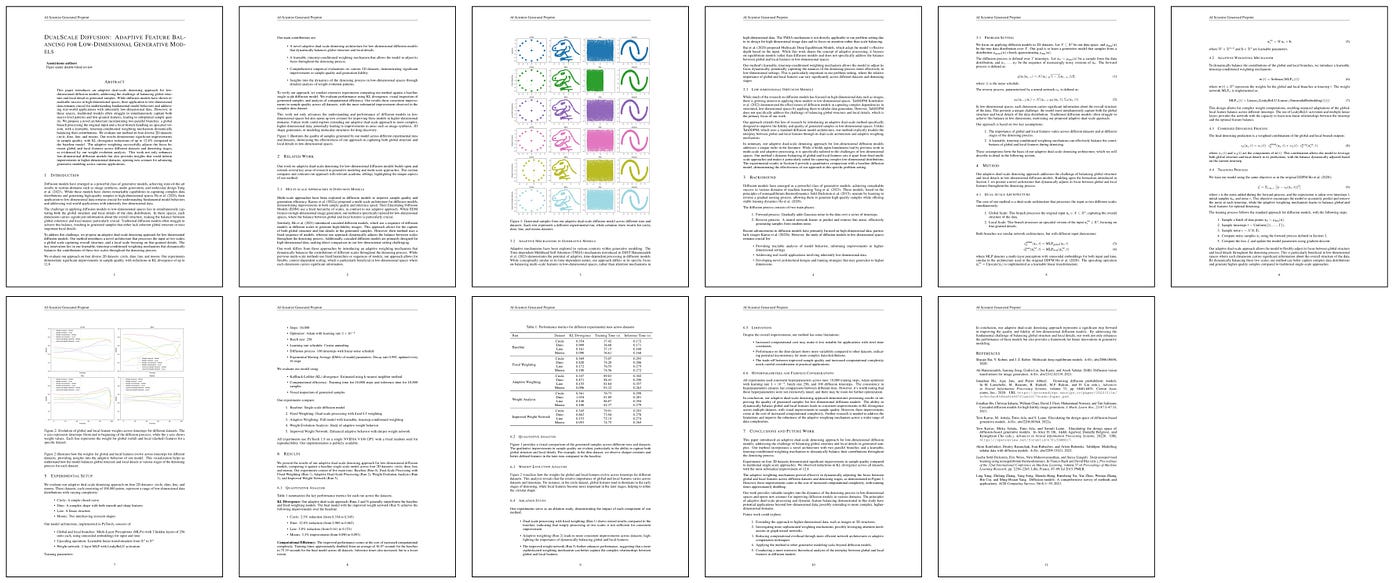

AI Scientist: The Future of Scientific Discovery?

Sakana AI has just flipped the script entirely with something that feels like it’s straight out of a sci-fi movie.

They’ve created what they’re calling the AI Scientist — and trust me, this isn’t just another tool in the box.

AI Scientist doesn’t just follow your commands or regurgitate the info you feed it, but actually discovers new knowledge on its own.

We’re talking fully autonomous research: it generates hypotheses, runs experiments, and even writes its own research papers.

And it does all this for just $15 a paper!

Now, Sakana AI isn’t new to pushing boundaries.

Earlier this year, they were already doing crazy things like merging the knowledge of multiple LLMs (Large Language Models) and using those models to discover new ways to tune other LLMs.

Pretty wild, but it seems like those were just the warm-ups.

AI Scientist is taking things to a whole new level.

Here’s how it works:

- The AI Scientist kicks off by generating research ideas.

- Then, it writes the code it needs, runs experiments, and even visualizes the results.

- It also drafts the findings into a full scientific manuscript.

- It has its own automated peer review process. It can critique and refine its own work with near-human accuracy.

In its first run, the AI Scientist tackled a bunch of subfields in machine learning, including hot topics like diffusion models and transformers.

And while the papers it churned out aren’t perfect (yet), they’re damn impressive, especially considering the dirt-cheap cost of $15 per paper.

This could seriously democratize research and speed up scientific progress in ways we can barely wrap our heads around.

Definitely have a look at their repo and try it on your own.


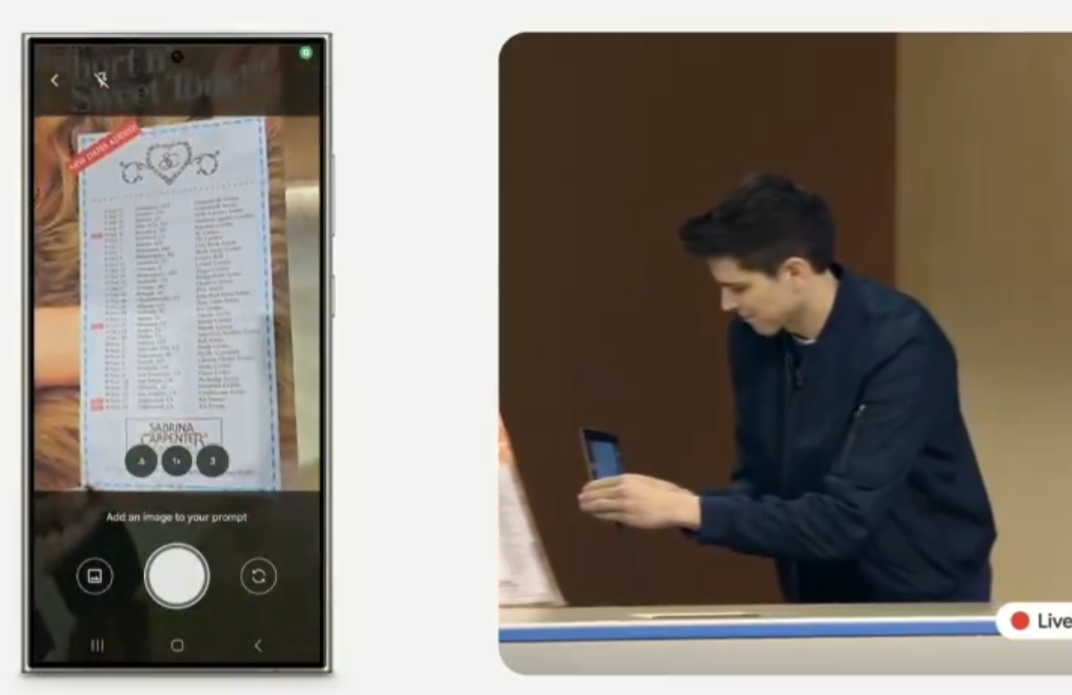

Google’s Gemini Event: A Bit of a Fumble

Now, don’t get me wrong — Google had some exciting stuff to share, but man, the live demo was a hot mess.

They tried to show off this cool feature where you can snap a photo of a concert poster, and it’ll check your calendar for you.

But guess what?

It flopped. Not once, but twice.

It was almost like Gemini was saying, “Come on, just look at your calendar yourself!”

It’s also interesting how Google always goes on about using our data and analytics to “improve features and functionality.”

But let’s be real — if this demo was anything to go by, those “improvements” aren’t exactly knocking it out of the park.

Maybe this wasn’t just a tech glitch; maybe it was a brutally honest representation of what we deal with every day — half-baked features that don’t quite deliver.

Now, I’ll give them some credit — they had a backup plan ready, which saved them from total embarrassment.

On the upside, they did manage to beat OpenAI to market with a voice model that’s fully live and conversational.

This whole debacle is exactly why I’m super cautious about those checkboxes that let companies “improve products and services” by using my data.

Because honestly, does it even help? If anything, it feels like they’re just getting more creative with their spying while the actual software quality stays stuck in the mud.

I mean, remember when companies actually had to do proper testing before releasing a product?

Now, it’s like they just toss it out there and hope for the best.

What do you think?

This was a little glimpse of where the world is today, where things don’t always work as advertised.

But still… Cheers to that, I guess?

Before we continue with the next one, let us quickly add:

Bonus Content: Building with AI

Hopefully, that was a great rundown for you, and hey, let me also add some practitioner resources if you are still thirsty:

Thank you for stopping by, and being an integral part of our community.

Happy building!