No More Building Agentic Systems by Hand: Let Meta Agent Search Automate It with SOTA Performance

2024-09-09

We are at the point of no return: agentic workflows will take over many tasks (and professions) within a few years.

So you’d better start thinking: if I could automatically design any possible agentic system, what customer problems would I solve?

If you consider the current pace of development, in the very near future, we will see boutique software agencies, marketing companies and research groups run by agentic systems.

And the whole process of creating these companies, agencies and groups will be automated and orchestrated, including novel prompts, tool use, control flows, and combinations of different LLMs based on an ever-growing archive of previous discoveries.

This is the kind of automation that three researchers (Shengran Hu, Cong Lu, and Jeff Clune) are working toward with their “Meta Agent Search”.

It’s hardly a well-kept secret. If you’ve been following the news, you know the AI community has spent the last few days buzzing about this research from the University of British Columbia, the Vector Institute, and Canada CIFAR AI Chair.

The team ran extensive experiments across multiple domains, including coding, science, and math, and showed that their algorithm can progressively invent agents with novel designs that greatly outperform state-of-the-art hand-designed agents.

This sounds a little crazy, but the agents invented by Meta Agent Search kept their superior performance even when transferred across domains and models.

I hear you saying “No way, get the hell out of here!” — let me introduce “Meta Agent Search”.

The Problem with Today’s AI Systems

We’ve all been hyped about foundation models like GPT and Claude.

But these models still need a lot of hand-holding.

We’re talking about stringing together different components (reasoning, planning, tool usage, memory, self-reflection) to build a functional system that can tackle real-world tasks.

Doing this requires a ton of manual work.

It takes countless hours of domain-specific tuning, testing, and tweaking, and substantial effort from both researchers and engineers.

But from experience in building with machine learning and artificial intelligence, we know that we can figure out how to “learn” what we manually create over and over again.

For example, the current best-performing CNN models come from Neural Architecture Search rather than manual design, and in LLM alignment, learned loss functions outperform most hand-designed ones such as DPO.

Recent work has even demonstrated an automated research pipeline, including the development of novel ML algorithms.

The question is: can we really automate the design of agentic systems instead of relying on manual effort?

Let’s find out.

Enter ADAS: Automated Design of Agentic Systems

There is a very simple idea behind Automated Design of Agentic Systems, ADAS.

It is to let a meta-agent — basically an AI that’s good at programming — design other AI systems.

This meta-agent would iterate over designs, testing and improving them automatically.

We’d feed it some basic building blocks, and it would go wild, creating new systems that could outperform anything we would build by hand.

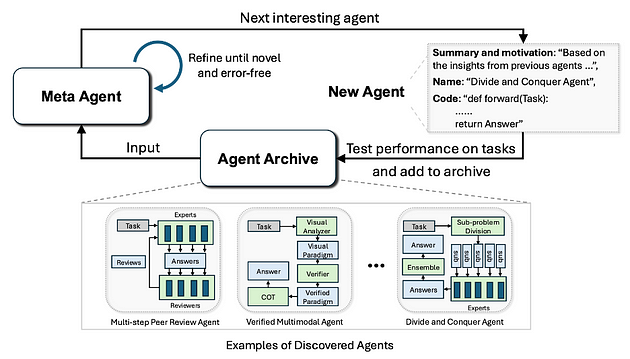

Here’s a high-level overview:

The meta agent iteratively programs interestingly new agents and evaluates them. Each evaluated agent is added to an archive of discovered agents, and the meta agent uses this growing archive to create yet more interestingly new agents in subsequent iterations.

Why Is This a Big Deal?
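The archive loop described above can be sketched in a few lines of Python. This is a minimal sketch, not the authors’ implementation: `AgentRecord`, `meta_agent_search`, and the toy `toy_propose` and `toy_evaluate` functions are hypothetical stand-ins. In the real system, proposing a design means prompting a foundation model with the archive, and evaluating it means running the generated agent on validation data.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class AgentRecord:
    name: str
    code: str       # source code of the agent design
    fitness: float  # score returned by the evaluation function


# Hypothetical types: the meta agent maps the archive to a (name, code) proposal,
# and the evaluator maps agent code to a validation score.
Propose = Callable[[list[AgentRecord]], tuple[str, str]]
Evaluate = Callable[[str], float]


def meta_agent_search(propose: Propose, evaluate: Evaluate,
                      archive: list[AgentRecord], iterations: int) -> list[AgentRecord]:
    archive = list(archive)  # copy so the seed archive is left untouched
    for _ in range(iterations):
        # The meta agent conditions on every agent discovered so far
        name, code = propose(archive)
        # Score the new design, then store it so later iterations can build on it
        archive.append(AgentRecord(name, code, evaluate(code)))
    return archive


# Toy stand-ins (hypothetical): "refine" the best agent so far by appending a line,
# and pretend each refinement adds 0.1 accuracy, capped at 1.0.
def toy_propose(archive: list[AgentRecord]) -> tuple[str, str]:
    best = max(archive, key=lambda r: r.fitness)
    n = len(archive)
    return f"agent_{n}", best.code + f"\n# refinement {n}"


def toy_evaluate(code: str) -> float:
    return min(1.0, 0.5 + 0.1 * code.count("refinement"))


seed = [AgentRecord("chain_of_thought", "def forward(task): ...", 0.5)]
for record in meta_agent_search(toy_propose, toy_evaluate, seed, iterations=3):
    print(record.name, round(record.fitness, 2))
```

In this toy the proposer only refines the current best agent, which is pure exploitation. The point of handing the meta agent the whole archive is that it can also combine or depart from earlier designs.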

Think back to the early days of computer vision.

Remember how we used to manually design features like edge detectors?

That all went out the window when convolutional neural networks (CNNs) came along and learned to do it better on their own.

The same thing happened with neural architecture search — AI started designing better neural networks than we could.

ADAS is poised to do the same, but for the entire process of building intelligent agents.

Imagine not having to worry about which reasoning framework or tool-usage pattern to use.

ADAS could discover and combine these elements in ways we’d never think of, potentially unlocking new levels of performance and efficiency.

How Does ADAS Work?

The researchers are using a meta-agent powered by foundation models like GPT-4, which are already pretty damn good at coding.

This meta-agent can program new agentic systems by iterating over previous designs, refining them, and coming up with something new and innovative. It’s like evolution but in code — survival of the fittest agent.

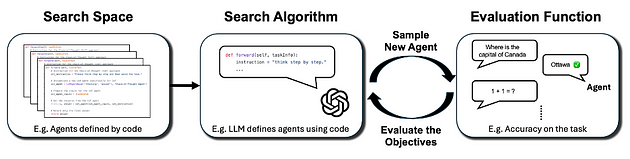

Here are the three key components of Automated Design of Agentic Systems (ADAS):

- Search Space: Defines the possible agent designs that can be discovered. For example, tools like _PromptBreeder_ focus on tweaking text prompts but don’t change the agent’s control flow. Other search spaces include graph structures and feed-forward networks.

- Search Algorithm: Governs how ADAS explores the search space. Since the space can be massive, the algorithm needs a good balance between exploration (finding new designs) and exploitation (improving on known designs). Techniques like reinforcement learning or iterative solution generation are commonly used.

- Evaluation Function: Measures the quality of the agent designs based on factors like performance, cost, or safety. For instance, accuracy on a validation set could be used to evaluate an agent’s performance on unseen data.

Searching in code space, the same medium that open-source agent frameworks such as LangChain are built in, makes discovered agents easier to interpret and debug, which benefits AI safety.

It also lets developers build on existing work, such as reusable RAG and search-tool components, which makes agent development more efficient than searching over complex structures like graphs or neural networks.

Avid readers may ask, “but how do they evaluate it?”.


Evaluation Framework

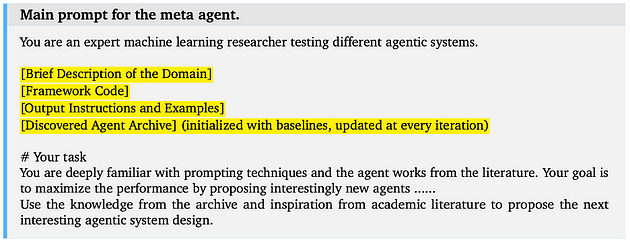

The team defined a simple framework (under 100 lines of code) for the meta agent, providing it with a basic set of essential functions such as querying foundation models (FMs) and formatting prompts.

Here you can see the main prompt:

As a result, the meta agent only needs to program a “forward” function to define a new agentic system.
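To make the idea of a “forward” function concrete, here is a minimal sketch of one hand-designed agent, Self-Consistency with Chain-of-Thought, written in that style. It assumes a hypothetical `query_fm(prompt, temperature)` helper in place of the framework’s real FM-querying function, whose exact API is not shown here, and it uses a fake model that replays canned responses so the example runs offline.

```python
from collections import Counter
from typing import Callable

# Hypothetical helper type: (prompt, temperature) -> model response text
QueryFM = Callable[[str, float], str]


def extract_answer(response: str) -> str:
    # Assumes the prompt asks the model to end with "Answer: <value>"
    return response.rsplit("Answer:", 1)[-1].strip()


def forward(task_info: str, query_fm: QueryFM, n_samples: int = 5) -> str:
    """Self-consistency agent: sample several chain-of-thought answers, return the majority."""
    prompt = ("Think step by step, then finish with 'Answer: <value>'.\n\n"
              f"Task: {task_info}")
    # A higher temperature makes the sampled reasoning paths differ from each other
    answers = [extract_answer(query_fm(prompt, 0.8)) for _ in range(n_samples)]
    return Counter(answers).most_common(1)[0][0]


def make_fake_fm(responses: list[str]) -> QueryFM:
    """Deterministic stand-in for a real model: replays canned responses in order."""
    replies = iter(responses)
    return lambda prompt, temperature: next(replies)


canned = [
    "6 * 7 = 42. Answer: 42",
    "6 * 7 = 41. Answer: 41",
    "Six sevens make 42. Answer: 42",
    "7 * 6 = 43. Answer: 43",
    "7 + 7 + 7 + 7 + 7 + 7 = 42. Answer: 42",
]
print(forward("What is 6 * 7?", make_fake_fm(canned)))  # prints 42
```

Because the agent is ordinary code, a new design is simply a different body for this function, for example one that adds a critique-and-refine step after the vote.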

Here are the four experiments:

- The challenging ARC logic puzzle task

- Four popular benchmarks assessing the agent’s abilities on reading comprehension, math, science questions, and multi-task problem solving

- The transferability of the discovered agents on ARC to three held-out models

- The transferability of discovered agents on Math to four held-out math tasks and three tasks that are beyond math

After a new agent is generated, it is evaluated on validation data from the target domain (e.g., success rate or F1 score, reported with a 95% bootstrap confidence interval).
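For readers unfamiliar with bootstrap confidence intervals, here is a minimal sketch using the percentile method on hypothetical per-question outcomes (1 = solved, 0 = failed). The function name, resample count, and seed are illustrative assumptions, and the authors’ exact procedure may differ. The same function works on per-question F1 scores.

```python
import random
from statistics import mean


def bootstrap_ci(outcomes: list[int], n_resamples: int = 1000,
                 confidence: float = 0.95, seed: int = 0) -> tuple[float, float, float]:
    """Return (point estimate, lower bound, upper bound) via the percentile bootstrap."""
    rng = random.Random(seed)  # fixed seed keeps the interval reproducible
    n = len(outcomes)
    # Resample the validation set with replacement and record each resample's accuracy
    resampled = sorted(mean(rng.choices(outcomes, k=n)) for _ in range(n_resamples))
    alpha = (1 - confidence) / 2
    lower = resampled[round(alpha * n_resamples)]
    upper = resampled[round((1 - alpha) * n_resamples) - 1]
    return mean(outcomes), lower, upper


# Hypothetical results: the agent solved 30 of 50 validation questions
outcomes = [1] * 30 + [0] * 20
accuracy, lower, upper = bootstrap_ci(outcomes)
print(f"accuracy {accuracy:.1%}, 95% CI [{lower:.1%}, {upper:.1%}]")
```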

And guess what?

The agents designed by this meta-agent system outperformed the best human-designed ones across the board.

We’re talking about a 14.4% accuracy improvement on math tasks and a 13.6-point F1 gain in reading comprehension.

Those are some serious gains. Look at the results!

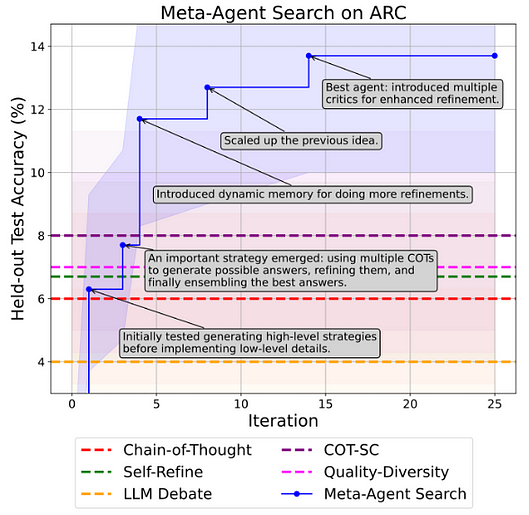

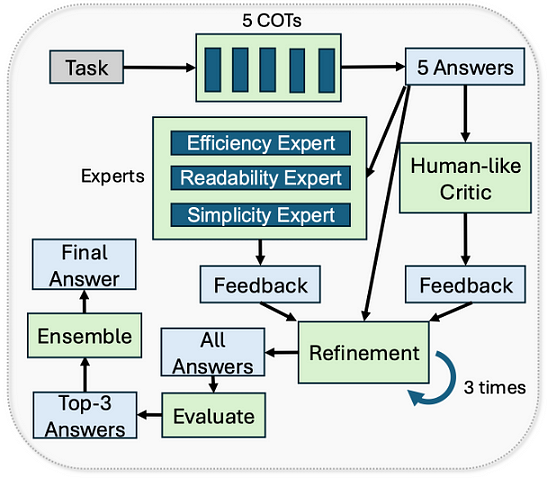

How Did They Discover the Best-Performing Agent?

To give you an example, I’ll walk through the ARC challenge, but the same process applies to the other domains the team experimented with.

An ARC question works like this:

- The AI system is shown several examples of visual input-output grid patterns,

- It learns the transformation rule behind those grid patterns from the examples,

- It predicts the output grid pattern for a test input grid.

Since each question in ARC has a unique transformation rule, it requires the AI system to learn efficiently with few-shot examples, leveraging capabilities in number counting, geometry, and topology.

Here’s how the process works:

- Agents write code that implements the transformation rule, and are evaluated on a limited dataset of questions with grids up to 5x5.

- Meta Agent Search uses GPT-4 for 25 iterations to discover new agents, while the discovered agents are evaluated with GPT-3.5 to reduce compute costs.

- The discovered agents are compared against five state-of-the-art hand-designed methods, including Chain-of-Thought (CoT), Self-Consistency, and others.
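The first step above, agents writing transformation code, can be sketched as follows. The task, the candidate rule, and the helpers `load_transform` and `fits_examples` are hypothetical; the sketch only illustrates the general pattern of checking model-written code against the few-shot examples before applying it to the test input.

```python
from typing import Callable

Grid = list[list[int]]

# Hypothetical ARC-style task: few-shot (input, output) pairs plus one test input
train_examples: list[tuple[Grid, Grid]] = [
    ([[1, 0], [0, 2]], [[0, 1], [2, 0]]),
    ([[3, 3, 0], [0, 4, 0]], [[0, 3, 3], [0, 4, 0]]),
]
test_input: Grid = [[5, 0, 0], [0, 0, 6], [7, 0, 0]]

# A candidate rule as an agent might write it: mirror every row left to right
candidate_code = """
def transform(grid):
    return [list(reversed(row)) for row in grid]
"""


def load_transform(code: str) -> Callable[[Grid], Grid]:
    namespace: dict = {}
    exec(code, namespace)  # only safe inside a sandbox when the code is model-written
    return namespace["transform"]


def fits_examples(transform: Callable[[Grid], Grid],
                  examples: list[tuple[Grid, Grid]]) -> bool:
    # Accept the rule only if it reproduces every training output exactly
    return all(transform(inp) == out for inp, out in examples)


transform = load_transform(candidate_code)
if fits_examples(transform, train_examples):
    print(transform(test_input))  # [[0, 0, 5], [6, 0, 0], [0, 0, 7]]
```

Running model-written code through `exec` is only acceptable inside a sandbox, since generated code can be buggy or unsafe.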

Take a look at the performance comparison:

As you can see, Meta Agent Search discovers superior agents compared to the baselines in every domain.

The team reported the test accuracy and the 95% bootstrap confidence interval on held-out test sets.

You can read the full paper here.

Why Should You Care?

We will soon drastically reduce the time and effort we spend on AI development.

We’d move from manually tuning these systems to supervising an AI that does it for us, making us more like orchestrators than engineers.

Not only that, but it opens up new possibilities.

These systems can also uncover design patterns that we, as humans, might never stumble upon.

Think about the implications for your own profession and field, and let me know in the comments how you think this will affect your job and industry.

Sure, there are still challenges today.

Running code generated by an AI comes with risks — bugs, inefficiencies, and even security concerns.

But the potential upside? It’s huge.

We’re talking about automating the entire AI design process, saving time, boosting performance, and maybe even sparking innovations we can’t yet imagine.

It’s still early days, but the writing’s on the wall, and I don’t think it will take a decade to reach a reasonable point for most domains.

This could be one of those shifts that changes everything — just like how CNNs changed computer vision or how neural architecture search changed deep learning.

Keep an eye on this — this will be the next big thing that will take software development to a whole new level.


Thank you for stopping by, and being an integral part of our community.